Introduction

Choosing the right AI model for your business is tricky. Almost every week brings a new “breakthrough” or “revolution” in the industry. So how do you keep up with all of this? Which free AI tools are reliable and bring the most value? It’s high time to shed some light on artificial intelligence, automation, and large language models (LLMs).

In this article:

1. What’s Changed Recently

2. Top AI Models in Business

3. Best Free AI Models for Business in 2025

4. Understanding Open-Source AI Models

5. Free AI Tools for Business in 2025

6. AI Ethics in 2025

7. Key Considerations for AI Model Selection [2025 Update]

8. Free AI Models for Business | 2025 Summary

What’s Changed Recently

- GPT-5 has arrived: OpenAI officially launched GPT-5 on August 7, 2025. It is now integrated into ChatGPT for all users (including free tiers) and is accessible via the API. The model delivers faster responses, “vibe coding,” and task-adaptive behavior across standard, mini, and nano variants.

- Claude 3.5 Sonnet remains a strong alternative: Anthropic’s model is still recognized for enterprise-strength reasoning, fast performance, and affordability, with top benchmark scores in coding, vision, and nuanced output. It's available via the Anthropic API, Amazon Bedrock, and Google Vertex AI.

Before You Read | Useful Definitions

- AI model – A set of algorithms trained on data to perform specific tasks like internet research, image recognition, or language translation.

- LLM (Large Language Model) – A type of AI model specializing in natural language processing and generation. Trained on vast amounts of text, these models capture the patterns of language well enough to produce fluent, context-aware, and meaningful output.

- Token – A unit of text that AI models use for input and output, often corresponding to a word or part of a word. Because providers typically bill per token, token counts are also the standard measure of AI operational cost.

- Constitutional AI principles – A written set of ethical guidelines (a “constitution”) that some AI models, such as Anthropic’s Claude, are trained to follow so that their behavior stays safe and reliable. Community forums play an important role in debating how such standards should evolve, particularly for open-source AI models.
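To make the token definition concrete, here is a rough estimator based on a common rule of thumb: about four characters of English text per token. This is only a heuristic (each model’s tokenizer splits text differently), and `estimate_tokens` is a hypothetical helper for illustration, not a library function.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Roughly estimate how many tokens a piece of English text will use.

    Assumption: ~4 characters per token, a common heuristic for
    GPT-style tokenizers. Real counts depend on the model's tokenizer.
    """
    if not text:
        return 0
    # Round up so that any non-empty text counts as at least one token.
    return math.ceil(len(text) / chars_per_token)


sample = "Choosing the right AI model for your business is tricky."
print(len(sample), "characters ->", estimate_tokens(sample), "tokens (approx.)")
```

Multiplying such an estimate by a provider’s per-token price gives a first-order cost forecast before you commit to a model.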

Top AI Models in Business

Artificial intelligence (AI) can be both a blessing and a curse in business.

On the one hand, it can unlock untapped resources within an enterprise and make processes far more efficient. On the other hand, it can get out of control, especially if not handled correctly. Whichever view you lean toward, one thing is certain: AI’s growing potential can no longer be ignored.

So, which AI models best suit your business?

Best Free AI Models for Business in 2025

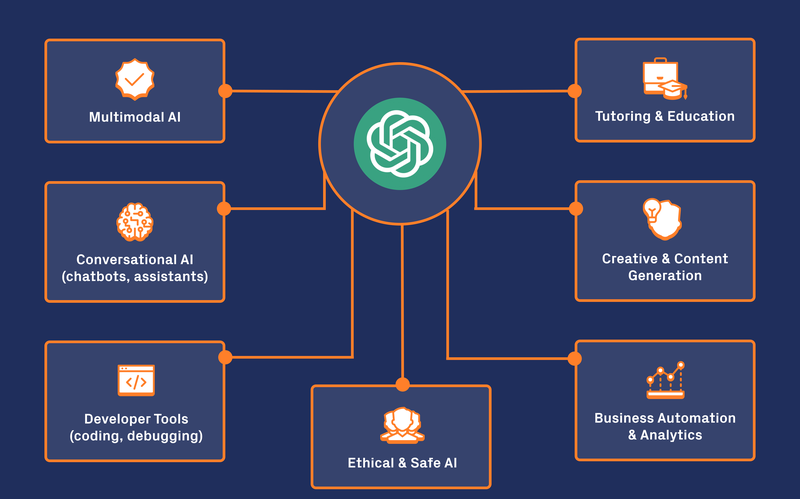

As of August 7, 2025, the flagship model for business AI is GPT-5, now powering ChatGPT for all users, including those on the free tier. GPT-5 offers:

- dramatically improved accuracy, reasoning, and contextual understanding over previous models;

- expert-level outputs, less frequent hallucinations, and greater transparency regarding limitations;

- API variants for developers, including GPT-5, GPT-5 mini, and GPT-5 nano, catering to different latency and cost needs;

- free users receive access to core GPT-5; paid tiers (Plus, Team, Enterprise) offer higher usage limits and advanced reasoning features, while API usage is billed separately per token.

Older models like GPT-3.5 and GPT-4-Turbo are now largely legacy, with most organizations and tools migrating to GPT-5 for new projects. GPT-5 is recognized by enterprises for its excellence in automation, decision support, software development, finance and health-related applications.

Key advantages:

- strongest reasoning and accuracy to date, with real-world expert performance;

- secure and transparent, with enhanced safeguards against misuse;

- available natively in Microsoft 365 Copilot, Azure AI, and GitHub Copilot through deep integration with Microsoft.

GPT-5 Model Family | OpenAI [August 2025 Update]

OpenAI’s current lineup for ChatGPT and API users is centered around the GPT-5 family, which has replaced legacy models like GPT-3.5, GPT-4, and GPT-4-Turbo. The following tiers are now available:

- Free access: all users (including those on the free plan) now receive ChatGPT powered by GPT-5 (standard), which provides advanced reasoning, multimodal input (text, image, and audio), fast response times, and industry-leading accuracy for general-purpose AI tasks.

- ChatGPT Plus ($20/month): paid subscribers enjoy higher usage limits and priority access during peak times. Pro subscribers ($200/month) get unlimited GPT-5 access plus GPT-5 Pro, which applies more compute to the most demanding, business-critical workflows.

- API access: developers can choose between GPT-5 variants:

  - GPT-5: Full capability, advanced reasoning ($1.25/million input tokens, $10/million output tokens).

  - GPT-5 Mini: Cost-optimized, high speed ($0.25/million input, $2/million output).

  - GPT-5 Nano: Ultra-cheap, for latency- and cost-sensitive uses ($0.05/million input, $0.40/million output).
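Because API pricing is per million tokens, choosing a tier is largely arithmetic. The sketch below plugs the per-million prices listed above into a simple cost formula, (input tokens × input price + output tokens × output price) / 1,000,000. It ignores discounts such as prompt caching, and the workload numbers are invented for illustration; check OpenAI’s pricing page before relying on the rates.

```python
# Per-million-token API prices (USD) as listed above (August 2025).
# Prices change over time; treat these as a snapshot, not a quote.
PRICES_PER_MILLION = {
    "gpt-5": {"input": 1.25, "output": 10.00},
    "gpt-5-mini": {"input": 0.25, "output": 2.00},
    "gpt-5-nano": {"input": 0.05, "output": 0.40},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a single request (no caching discounts)."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical workload: 10,000 requests, each ~1,500 input and ~500 output tokens.
for name in PRICES_PER_MILLION:
    monthly = 10_000 * request_cost(name, 1_500, 500)
    print(f"{name}: ${monthly:,.2f}")
```

With these assumptions the same workload costs about $68.75 on GPT-5, $13.75 on GPT-5 Mini, and $2.75 on GPT-5 Nano, which is why routing simple tasks to the smaller variants can cut costs substantially.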

So, what are the key differences compared to the legacy GPT-3.5 & GPT-4-Turbo models?

| Model | Availability | Speed | Cost (API) | Capabilities | Features and use cases |
|---|---|---|---|---|---|
| GPT-3.5 | Mostly legacy, limited use | Fast | ~$0.002 per 1K tokens (~$2 per 1M) | Basic natural language understanding, quick Q&A | Good for fast, low-cost casual use and basic automation |
| GPT-4 | Legacy, replaced by GPT-5 | Moderate | Higher than GPT-3.5 (varies) | Advanced NLP, better reasoning, some multimodal | Stronger content generation, customer support bots |
| GPT-4-Turbo | Deprecated, replaced by GPT-5 | Fastest among GPT-4 variants | Cheaper than standard GPT-4 | Improved efficiency, handles text + limited images | Efficiency-optimized; previously served ChatGPT Plus users |
| GPT-5 (Standard) | Default for all ChatGPT users (free and paid) | Fast | $1.25 / 1M input, $10 / 1M output tokens (free in ChatGPT) | Multimodal (text, image, audio), expert reasoning | Highest accuracy, fewer hallucinations, broadest use |
| GPT-5 Pro | Higher-priced ChatGPT plans (e.g., Pro) | Slower; spends extra compute on reasoning | Higher, varies by usage tier | All GPT-5 features plus extended reasoning and expanded context | For demanding workflows, enterprise-grade applications |

GPT-5 Major Upgrades Over Prior Models:

- Unified intelligence: no need to switch models; GPT-5 dynamically optimizes speed and reasoning per request.

- Fewer hallucinations: significant reduction in fabricated content, safer completions, and more transparent reporting of model certainty.

- Scalability: all business users now get enterprise-level language AI, with legacy GPT-3.5 and GPT-4-Turbo completely phased out for new users since August 2025.

- API flexibility: cost-saving features like prompt caching, adjustable reasoning effort, verbosity control, and support for agentic workflows.
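To illustrate the reasoning-effort and verbosity controls, here is a sketch that builds (but does not send) a request to OpenAI’s Responses API using only the standard library. The `reasoning.effort` and `text.verbosity` fields and their allowed values are as documented at GPT-5’s launch; treat them as assumptions and verify against the current API reference. The `OPENAI_API_KEY` environment variable and the prompt are illustrative.

```python
import json
import os
import urllib.request

# Allowed values as documented for GPT-5 at launch (verify before use).
REASONING_EFFORTS = {"minimal", "low", "medium", "high"}
VERBOSITY_LEVELS = {"low", "medium", "high"}


def build_gpt5_request(prompt: str, effort: str = "minimal", verbosity: str = "low",
                       model: str = "gpt-5") -> urllib.request.Request:
    """Build (but do not send) a Responses API request for a GPT-5 model."""
    if effort not in REASONING_EFFORTS:
        raise ValueError(f"unknown reasoning effort: {effort}")
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unknown verbosity: {verbosity}")
    body = {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort},   # less effort -> faster, cheaper answers
        "text": {"verbosity": verbosity},  # controls how long the final answer is
    }
    return urllib.request.Request(
        "https://api.openai.com/v1/responses",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


req = build_gpt5_request("Summarize our Q2 support tickets in three bullets.")
# To send it: with urllib.request.urlopen(req) as resp: data = json.load(resp)
```

Low effort and low verbosity suit quick lookups and classification; raise both for analysis tasks where accuracy matters more than latency.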

In summary, the evolution from GPT-3.5 through GPT-4 and GPT-4-Turbo to GPT-5 reflects a continuous push toward more capable, efficient, and safer AI models. For most current business and casual use cases, GPT-5 (or its variants mini and nano) is your go-to choice, rendering older models largely legacy options reserved for niche compatibility.

Gemma 2 & Gemini 2.0 | Google AI Models

Integrating AI models without reliance on expensive cloud infrastructure remains a significant advantage in developing scalable AI projects. Google’s Gemma 2 family offers such a solution as a free, open-source model suite optimized for local deployment on user hardware. Meanwhile, larger models like Gemini 2.0 provide enhanced accuracy and flexibility with cloud-based access but come with computational costs.

Gemma 2 Overview

- Open-weight and free to use locally (under Google’s Gemma terms of use), but requires compatible hardware.

- Available in multiple sizes, notably 2B, 9B, and 27B parameters; Google reports that the 27B model is competitive with much larger models while delivering excellent inference speed and cost efficiency.

- Gemma 2 models are built with a redesigned architecture emphasizing performance and inference efficiency; they run well on a variety of hardware, from gaming laptops to data center GPUs.

- Two checkpoint types for each size: pre-trained base models and instruction-tuned (“-it”) models for chat and instruction following.

- Ideal for self-hosted applications such as automation, content generation, and customer support enhancements.

- Not multimodal: Gemma 2 currently supports text-only input/output.

Gemini 2.0 Overview

- Proprietary flagship model designed for high-end AI applications, including research, enterprise AI, and advanced automation.

- Supports multimodal inputs (text, images, audio) and integrates deeply with Google’s ecosystem, including the Gemini app, YouTube, and Google Maps.

- Variants include:

  - Flash: fast and well suited for reasoning tasks.

  - Flash-Lite: smaller and faster, optimized for lightweight, cost-sensitive applications.

- Access typically involves paid cloud API usage, though limited free access is available through the Gemini app and Google AI Studio.

- Google also offers Gemini Nano, a smaller model that runs on-device on phones such as Pixel, providing some offline AI capabilities.

- Recommended for advanced AI tasks requiring multimodal input and high scalability.

| Feature | Gemma 2 (free, open weights) | Gemini 2.0 (paid API) |
|---|---|---|
| Licensing | Open weights under Google’s Gemma terms of use | Proprietary |
| Deployment | Local hardware (self-hosted) | Cloud-based API |
| Model Sizes | 2B, 9B, 27B parameters | Larger, enterprise-grade models |
| Input Type | Text only | Multimodal (text, images, audio) |
| Use Case Focus | Lightweight applications, edge AI, automation | Research, enterprise solutions, advanced AI workflows |
| Free Access | Fully free for local use | Limited free access via the Gemini app and Google AI Studio, otherwise paid |
| Integration | Supports deployment via Hugging Face, Google Cloud, local environments | Deep integrations with Google services (YouTube, Maps, Gemini app) |
| Hardware Requirements | Moderate to high (requires sufficiently powerful CPUs/GPUs) | None locally; runs on Google’s cloud infrastructure |

Gemma 2 provides high-performance, efficient and free-to-use AI models optimized for local execution and edge use cases where privacy and cost control are priorities. It offers cutting-edge efficiency and strong reasoning power in text tasks, empowering organizations and developers to run powerful AI on their own hardware.
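One common way to run Gemma 2 on your own hardware is a local runtime such as Ollama. The article doesn’t prescribe a runtime, so this is an assumption: the sketch targets Ollama’s local `/api/generate` endpoint on its default port and assumes the `gemma2:2b` model has already been pulled. Only the standard library is used.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_gemma_request(prompt: str, model: str = "gemma2:2b") -> urllib.request.Request:
    """Build a non-streaming generation request for a locally served Gemma 2 model."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "gemma2:2b") -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    with urllib.request.urlopen(build_gemma_request(prompt, model)) as resp:
        return json.load(resp)["response"]
```

Since prompts and outputs never leave the machine, this setup fits the privacy and cost-control scenarios above; `gemma2:9b` or `gemma2:27b` can be swapped in if the hardware allows.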

Gemini 2.0, by contrast, caters to users and enterprises needing advanced multimodal AI capabilities and cloud scalability with deep integration into Google's ecosystem, but typically involves usage fees tied to computational costs.
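For comparison, cloud access to Gemini goes through an HTTP API rather than local hardware. The sketch below builds a `generateContent` request for the Gemini API and extracts the reply text from a response. The model name `gemini-2.0-flash`, the `x-goog-api-key` header, and the `GEMINI_API_KEY` environment variable are assumptions to check against Google’s current API documentation.

```python
import json
import os
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta/models"


def build_gemini_request(prompt: str, model: str = "gemini-2.0-flash") -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a Gemini model."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        f"{API_ROOT}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": os.environ.get("GEMINI_API_KEY", ""),
        },
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Join the text parts of the first candidate in a generateContent response."""
    parts = response["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)
```

Each call is billed by usage, which is the computational-cost trade-off described above.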

This overview reflects Google’s AI model landscape as of mid-2025, positioning Gemma 2 as an accessible open-weight solution and Gemini 2.0 as a paid, high-end cloud AI platform with expanded capabilities.

LLaMA AI Model | Meta

Meta, formerly known as Facebook, continues to be a major player in the AI space with its influential LLaMA (Large Language Model Meta AI) series of models. Originally developed for natural language processing (NLP) tasks such as text generation, summarization, multilingual translation, and conversational AI, the LLaMA family has rapidly evolved into a cutting-edge suite of models used across research, business, and specialized domains like healthcare and finance.

Key Features and Latest Versions

- LLaMA 4 (flagship, released April 2025) introduces several new capabilities:

  - Multimodal inputs: processes images as well as text, enabling richer data understanding.

  - Mixture-of-Experts (MoE) architecture: a router activates only a few expert sub-networks for each token, keeping compute per token manageable even at massive total parameter counts.

  - Massive context windows: up to 10 million tokens in the Scout variant and 1 million tokens in the Maverick variant, enabling analysis of extraordinarily large documents and datasets.

  - Strong benchmark results: according to Meta, LLaMA 4 matches or exceeds GPT-4o and other industry leaders on various NLP, reasoning, and coding benchmarks.

- LLaMA 3.1 (high-performing predecessor)

  Released in 2024, LLaMA 3.1 (up to 405B parameters) remains widely used in research and commercial settings, notable for:

  - enhanced reasoning, multilingual support, and code generation;

  - a large 128K-token context window suitable for complex conversation and document understanding;

  - continued use in academic research and enterprise projects under Meta’s licensing terms.

- LLaMA API and ecosystem

  In 2025, Meta introduced a limited-preview LLaMA API so that developers and businesses can integrate the models without a full on-premises deployment. Platforms like Hugging Face host several LLaMA model variants, simplifying fine-tuning and deployment.

Open-Source and Licensing

The first LLaMA release (2023) was limited to non-commercial research use, while Llama 2 and 3 shipped with open weights under Meta’s community licenses, which permit commercial use subject to conditions. LLaMA 4 continues this pattern for its openly downloadable variants, while access to the largest and most powerful MoE models is more controlled.

Businesses interested in commercial or scaled deployments should consult Meta’s licensing conditions and those of third-party hosts (e.g., Hugging Face) to understand obligations and potential fees.

Running LLaMA models locally or in private clouds ensures superior control over data privacy, regulatory compliance, and vendor independence.
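When you self-host an instruction-tuned LLaMA model, prompts must follow the chat template the model was trained on. The sketch below renders messages in the Llama 3 / 3.1 instruct format (LLaMA 4 uses different special tokens, so this template does not apply to it). In practice, Hugging Face’s `tokenizer.apply_chat_template` builds this string from the template bundled with the model and is the safer choice; the hand-written version here just shows what that string looks like.

```python
ALLOWED_ROLES = {"system", "user", "assistant"}


def format_llama3_prompt(messages: list[dict[str, str]]) -> str:
    """Render chat messages in the Llama 3 / 3.1 instruct prompt format.

    Each message is {"role": "system" | "user" | "assistant", "content": str}.
    The prompt ends with an open assistant header so the model writes the reply.
    """
    parts = ["<|begin_of_text|>"]
    for message in messages:
        role = message["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role}")
        parts.append(f"<|start_header_id|>{role}<|end_header_id|>\n\n")
        parts.append(f"{message['content'].strip()}<|eot_id|>")
    # Leave the assistant turn open for generation.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


prompt = format_llama3_prompt([
    {"role": "system", "content": "You are a concise financial analyst."},
    {"role": "user", "content": "Summarize the attached quarterly report."},
])
```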

Typical Use Cases

LLaMA models are highly versatile and suited for a wide range of applications, particularly where control, customization, and cost-efficiency are critical. Key scenarios include:

- Academic research and testing of large language models with full transparency and adaptability.

- General NLP tasks: text generation, summarization, classification, translation, question answering.

- Cost-sensitive production environments: Avoiding ongoing API call charges by self-hosting.

- Domain-specific customization: Fine-tuning models for healthcare reports, financial analytics, legal documents, and more.

- Enterprise AI deployments requiring large context understanding and multimodal insights.

- Real-time analytics and customer service automation powered by large context windows and powerful reasoning.

- Advanced coding assistance, debugging, and software development workflows integrating LLaMA’s strong understanding of programming languages.

Meta's LLaMA | Summary

Meta’s LLaMA series represents one of the most advanced and flexible open-source AI ecosystems in 2025. The new LLaMA 4 flagship, with its multimodal and MoE innovations, sets a high bar for performance and scalability, rivaling proprietary models like OpenAI’s GPT-5 and Google’s Gemini series. At the same time, LLaMA’s open licensing (for many variants), large community support, and focus on privacy make it especially attractive for enterprises seeking both power and control.

For organizations considering large-scale, customizable AI deployments that respect data sovereignty and cost constraints, LLaMA stands as a compelling choice in the modern AI landscape.

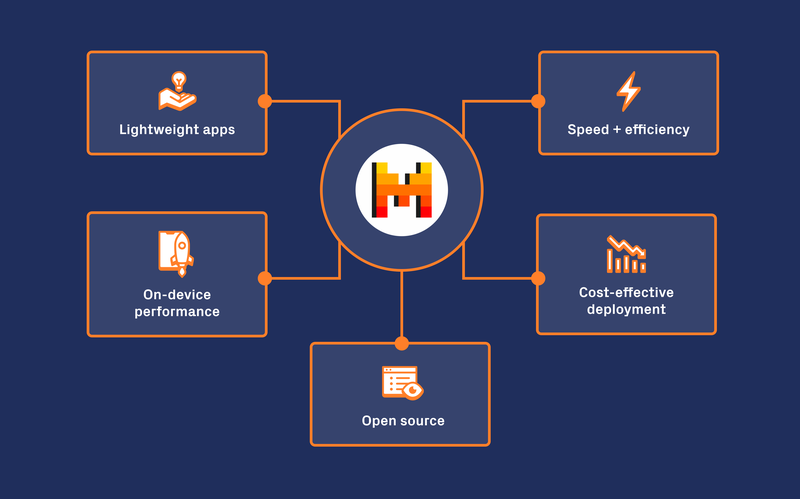

Mistral 7B & Mixtral Models | Mistral AI

Mistral AI, founded by former Meta and Google DeepMind engineers, has quickly become a leading force in open-weight AI models, focusing on privacy, security, and performance. The ability to run these models in-house significantly reduces risks related to exposing sensitive business data to third-party APIs, making them highly attractive for enterprises concerned with data control.

Mistral’s flagship AI models are:

- Mistral 7B. A 7.3-billion-parameter dense transformer, Mistral 7B delivers performance and efficiency well beyond what is typical for its size.

  Key features include:

  - outperforming larger open models like LLaMA 2 13B across many NLP benchmarks, including reasoning, comprehension, and code generation;

  - attention mechanisms such as Grouped-Query Attention (GQA) and Sliding Window Attention (SWA), which speed up inference and reduce memory usage; the original release used a 4,096-token attention window, and later versions support a 32K-token context window;

  - release under the Apache 2.0 license, allowing unrestricted commercial use and modification;

  - efficient local deployment on consumer-grade hardware, enabling real-time applications such as chatbots, summarization, and coding assistants;

  - a fine-tuned variant, Mistral 7B Instruct, which shows strong instruction-following and outperforms comparable models at its parameter scale.

- Mixtral. A sparse Mixture of Experts (MoE) model, Mixtral (8x7B) scales up Mistral 7B’s design: each layer contains eight expert feed-forward blocks, and a router sends each token to two of them, so only about 12.9 billion of its 46.7 billion total parameters are active per token.

  Advantages include:

  - Improved performance and efficiency compared to dense models of similar or larger sizes.

  - Outperforms models like GPT-3.5 and LLaMA 2 70B on reasoning, language understanding, and code generation benchmarks.

  - Delivers large-scale AI performance while maintaining faster inference and lower running costs due to sparse expert activation.

  - Can be deployed on-premises or hosted on platforms that support MoE architecture, though it requires more complex infrastructure and resource management than standard dense models.
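Sliding Window Attention, listed among Mistral 7B’s features, restricts each token to a fixed number of recent positions (4,096 in the original release, per Mistral’s paper). The sketch below builds such an attention mask with a tiny window so it can be printed. The convention that the window includes the current token is one common choice, assumed here for illustration; this is not Mistral’s implementation.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal: a token never attends to later tokens (j <= i).
    Sliding window: only the `window` most recent positions, including i itself.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


mask = sliding_window_causal_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Each row has at most `window` allowed positions, so the attention cost and key-value cache per layer stay bounded no matter how long the input grows; stacking layers still lets information travel further than one window.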

Benefits and Use Cases

These free and open-source models offer enterprises the flexibility and control necessary for privacy-sensitive or cost-conscious AI initiatives. Typical applications include:

- high-quality content generation for marketing, design, and customer engagement;

- chatbots and conversational AI with strong reasoning and language capabilities;

- coding assistance and software development tools;

- edge AI and applications requiring fast, efficient local inference;

- business automation with compliance and data security in mind.

| Feature | Mistral 7B | Mixtral |
|---|---|---|
| Model Type | Dense transformer | Mixture of Experts (MoE) |
| Parameter Count | 7.3 billion | 12.9 billion active per token (from 46.7 billion total) |
| Performance Level | Excels at simpler/general NLP tasks | High performance for complex, large-scale tasks |
| Hardware Requirements | Moderate, suitable for consumer-grade GPUs | Higher, requires infrastructure to manage experts |
| Deployment Complexity | Easier to deploy and fine-tune | More complex deployment and resource management |
| Application Fit | Lightweight applications, real-time uses | Enterprise-grade AI, large data and compute loads |
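The “active vs. total parameters” distinction in the table comes from sparse expert routing, which LLaMA 4 and DeepSeek also use. Below is a toy top-2 Mixture-of-Experts layer in plain Python. It follows the gating scheme described for Mixtral (a softmax over the top-k router scores), but the vector sizes, router weights, and expert functions are invented for illustration; real experts are feed-forward networks inside every transformer layer.

```python
import math
from typing import Callable

Vector = list[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, router_weights: list[Vector],
              experts: list[Callable[[Vector], Vector]],
              top_k: int = 2) -> tuple[Vector, list[int]]:
    """Send one token vector x through its top_k experts and mix their outputs.

    router_weights: one weight vector per expert (a linear router).
    experts: functions mapping a vector to a vector of the same length.
    Returns (mixed output, indices of the experts that actually ran).
    """
    # 1. Router: one score (logit) per expert.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # 2. Keep only the top_k experts for this token.
    chosen = sorted(range(len(experts)), key=lambda e: logits[e], reverse=True)[:top_k]
    # 3. Softmax over the selected logits gives the mixing weights.
    gates = softmax([logits[e] for e in chosen])
    # 4. Only the chosen experts are evaluated; the others stay idle.
    output = [0.0] * len(x)
    for gate, e in zip(gates, chosen):
        y = experts[e](x)
        output = [o + gate * y_i for o, y_i in zip(output, y)]
    return output, chosen


# Toy setup: 8 experts (as in Mixtral); expert k scales its input by k + 1.
experts = [lambda v, k=k: [(k + 1) * v_i for v_i in v] for k in range(8)]
router_weights = [[float(k), 0.0] for k in range(8)]  # favors higher-numbered experts
out, used = moe_layer([1.0, 0.0], router_weights, experts)
print("experts used:", used, "output:", out)
```

Only two of the eight experts run for this token, which is why an MoE model’s compute per token tracks its active parameters rather than its total size, even though all experts must still fit in memory.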

Jamba Model for Code Generation | AI21 Labs

AI21 Labs specializes in advanced natural language processing (NLP) technologies and offers a suite of high-performance AI models designed for tasks like text generation, comprehension, semantic search, and code generation. Among their flagship offerings is the Jamba-Instruct model, an instruction-tuned large language model (LLM) comparable in sophistication to GPT-4 and Claude.

Key Features of Jamba-Instruct

- Hybrid Architecture: Jamba is based on a novel hybrid model architecture combining state-space models (SSM) with Transformer attention mechanisms, providing optimized performance, quality, and cost-efficiency.

- Large Context Window: Jamba-Instruct supports a 256,000-token context window, allowing it to process extremely long documents (equivalent to a 400-page novel or an entire fiscal year’s financial filings) without manual chunking or segmentation.

- Instruction-Tuned for Enterprise: the model is fine-tuned to follow complex instructions reliably, making it well-suited for sophisticated enterprise workflows such as detailed Q&A, structured reporting, and step-by-step reasoning.

- Safety and Reliability: it includes built-in safety guardrails and chat capabilities designed to reduce hallucinations and keep outputs accurate, relevant, and appropriate for commercial deployment.

- Multimodal Creativity: Jamba can integrate with text-to-image generation tools, enhancing its usefulness for creative professionals and developers who need high-quality image generation alongside language tasks.

- Cost-Effectiveness: Jamba-Instruct is designed to operate with a smaller cloud footprint while maintaining leading-edge performance, making it attractive for enterprise-scale deployment without prohibitive costs.
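Jamba’s hybrid design pairs Transformer attention with state-space model (SSM) layers. As a deliberately simplified sketch (a scalar, time-invariant linear SSM, not the learned, input-dependent Mamba layers Jamba actually uses), the scan below shows why SSM layers stay cheap on long inputs: each step updates a fixed-size state instead of attending over every earlier token. The parameters `a`, `b`, and `c` are hypothetical fixed values.

```python
def linear_ssm_scan(inputs: list[float], a: float, b: float, c: float) -> list[float]:
    """Run a scalar linear state-space model over a sequence.

    h_t = a * h_{t-1} + b * x_t   (state update)
    y_t = c * h_t                 (readout)
    The state h has a fixed size, so memory does not grow with sequence length.
    """
    h = 0.0
    outputs = []
    for x in inputs:
        h = a * h + b * x
        outputs.append(c * h)
    return outputs


# An impulse decays geometrically through the state (|a| < 1 keeps it stable).
print(linear_ssm_scan([1.0, 0.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0))
```

Attention, by contrast, keeps keys and values for every previous token, so its memory grows with context length; mixing the two lets a hybrid model keep attention’s precision while handling very long contexts more cheaply.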

Jamba-Instruct: Applications and Use Cases

- Code Generation: streamlining software development by turning natural language descriptions into functional and efficient code snippets.

- Document Understanding: accurately summarizing, querying, and extracting insights from long, complex documents such as legal texts, financial reports, and technical manuals.

- Instruction Following: handling structured and detailed instructions, such as tutoring, creating study materials, or generating content within precise guidelines.

- Conversational AI and Customer Support: building chatbots capable of sustaining long conversations with context awareness and nuanced understanding.

- Marketing Content Creation: crafting engaging, instruction-based marketing copy, product descriptions, and other creative materials.

Integration and Availability

- AI21 Studio: AI21 Labs provides a platform called AI21 Studio that allows businesses and developers to build sophisticated language applications on top of the Jamba models.

- Cloud Access: Jamba-Instruct is available through major cloud platforms, including Microsoft Azure AI Studio (as serverless API via Azure’s Models-as-a-Service) and Amazon Bedrock, facilitating scalable enterprise adoption.

- Flexible Pricing and Trials: AI21 Labs offers usage-based pricing models alongside free trials to enable businesses to explore and test their models cost-effectively.

When to Use Jamba-Instruct?

- When you need to execute precise, instruction-based AI tasks requiring structured outputs or step-wise reasoning.

- For content creation that follows specific editorial or compliance constraints.

- When you require detailed explanations or tutoring materials generated on demand.

- For generating engaging product descriptions or other specialized marketing content.

- When processing very large documents that demand extended context capabilities without manual segmentation.

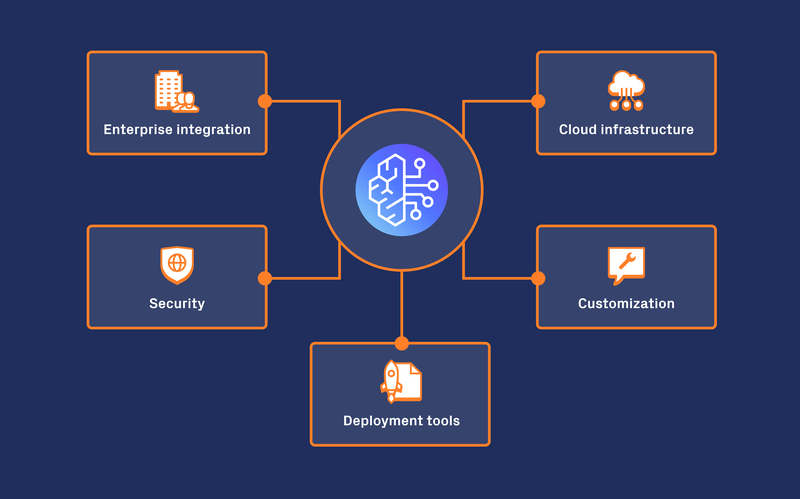

Bedrock & AWS | Amazon AI Services

Amazon Bedrock is a fully managed service designed to simplify the integration and deployment of generative AI applications by providing access to foundation models (FMs) from multiple AI providers (including Anthropic, AI21 Labs, Stability AI, Meta (LLaMA), and Mistral) through a single API on AWS.

While Bedrock itself does not have a fully free tier, many AWS services offer free-tier usage that can support experimentation and prototyping when combined with Bedrock. This makes it possible for developers and businesses to try out AI workloads at limited scale with minimal initial cost.

Key Features and Services Offered

- Access to multiple third-party foundation models: enabling users to select AI engines best suited to their needs without managing individual models.

- Amazon Comprehend: for natural language processing tasks such as sentiment analysis, entity recognition, and topic modeling.

- Amazon Polly: converts text into lifelike speech, supporting text-to-speech applications.

- Amazon Lex: facilitates building of conversational interfaces such as voice and chatbots, powering interactive virtual agents.

- Generative Video AI Models: emerging AWS capabilities in generative video support creative industries with innovative content creation opportunities.

- Security and Compliance: all AI services integrate with AWS’s enterprise-grade security, data privacy, and compliance infrastructure.
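Bedrock’s single-API approach is easiest to see through its Converse operation, which uses the same message shape across supported models. The sketch below builds the keyword arguments for boto3’s `bedrock-runtime` `converse` call without contacting AWS. The model ID is an example to verify in the Bedrock console for your account and region, and the region in the commented-out call is an assumption.

```python
def build_converse_request(model_id: str, prompt: str, max_tokens: int = 512,
                           temperature: float = 0.2) -> dict:
    """Build keyword arguments for the Bedrock Runtime Converse API.

    The message format is shared across Converse-compatible models, so
    switching providers is mostly a matter of changing model_id.
    """
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


# Example model ID (verify availability in your account and region).
request = build_converse_request(
    "anthropic.claude-3-5-sonnet-20240620-v1:0",
    "Classify this support ticket as billing, technical, or other: ...",
)

# With boto3 installed and AWS credentials configured:
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   response = client.converse(**request)
#   print(response["output"]["message"]["content"][0]["text"])
```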

Bedrock & AWS – Pricing Overview

Amazon Bedrock’s pricing is usage-based and includes multiple cost components:

- On-Demand Pricing: pay only for tokens processed during inference (input and output tokens), image generations, or embeddings requests. Ideal for variable workloads or experimentation.

- Provisioned Throughput: subscription-style model where users reserve dedicated capacity with hourly fees, suitable for predictable, high-volume enterprise workloads.

- Model-Dependent Costs: different foundation models (e.g., Meta LLaMA, Anthropic Claude, AI21 Labs Jamba) have distinct pricing tiers reflecting their computational requirements.

- Additional Charges: fees for data storage, model customization, and data transfer apply depending on usage patterns.

For example, token processing costs are typically calculated per 1,000 tokens, while image generations incur charges per item. Provisioned throughput rates vary by model and usage commitment.
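To compare the two main pricing modes, the sketch below estimates a month of On-Demand usage against Provisioned Throughput. Every price in it is an illustrative placeholder, not an actual Bedrock rate; the 730-hour month is a common billing approximation, and “model units” is the unit in which Bedrock sells provisioned capacity.

```python
HOURS_PER_MONTH = 730  # common billing approximation (24 * 365 / 12)


def on_demand_monthly_cost(input_tokens: int, output_tokens: int,
                           price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Pay-per-token cost, with prices quoted per 1,000 tokens."""
    return input_tokens / 1000 * price_in_per_1k + output_tokens / 1000 * price_out_per_1k


def provisioned_monthly_cost(hourly_rate: float, model_units: int = 1) -> float:
    """Reserved capacity is billed per hour whether or not it is used."""
    return hourly_rate * model_units * HOURS_PER_MONTH


# Illustrative placeholder numbers only; look up real rates for your model.
on_demand = on_demand_monthly_cost(200_000_000, 50_000_000,
                                   price_in_per_1k=0.003, price_out_per_1k=0.015)
provisioned = provisioned_monthly_cost(hourly_rate=20.0)
print(f"on-demand ${on_demand:,.2f} vs provisioned ${provisioned:,.2f}")
```

With these made-up inputs, On-Demand comes to about $1,350 against $14,600 for a provisioned unit; reserved capacity only pays off once sustained volume pushes the on-demand bill past the fixed hourly commitment.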

Free Tier and Experimentation

- AWS offers a free tier for many supporting services (Lambda, S3, CloudWatch, etc.) which can indirectly enable low-cost experimentation with Bedrock-based AI workflows.

- Some AI models integrated with Bedrock offer limited free access or trials, allowing organizations to prototype AI-powered features before scaling or committing financially.

Business Applications

AWS Bedrock and associated AI services are widely used for:

- Workflow automation including text processing, document understanding, and customer service enhancement.

- Advanced conversational AI powering chatbots, voice assistants, and virtual agents.

- Insights extraction via sentiment analysis, named entity recognition, and summarization.

- Multimedia content generation, especially with emerging video generation capabilities.

- Scalable AI deployments that leverage AWS’s worldwide cloud infrastructure for reliability and performance.

DeepSeek-V3 & R1 Model

Created by a Chinese AI startup, DeepSeek AI models have rapidly become prominent players in the AI industry, widely recognized for their strong performance and cost efficiency.

DeepSeek offers advanced natural language processing models such as DeepSeek-V3 (text generation and conversational tasks) and DeepSeek-R1 (advanced reasoning and code generation, offered as “DeepThink (R1)” in DeepSeek’s chat app), both built on a Mixture of Experts (MoE) architecture to optimize inference speed and energy use. DeepSeek’s models are also evolving toward multimodal capabilities, which expands their value in creative fields that require realistic image and audio generation.

DeepSeek enables self-hosting and fine-tuning on private infrastructure, supporting industries with stringent privacy needs like healthcare and finance. While local use can be free with proper hardware, cloud or platform access typically involves subscription or pay-per-use fees.
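When DeepSeek-R1 is self-hosted, its raw output typically contains a reasoning trace wrapped in `<think>…</think>` before the final answer, and applications usually show users only the answer. The helper below separates the two. It assumes that tag convention, and it also handles setups where the opening `<think>` tag is placed in the prompt, so only the closing tag appears in the generated text; `split_reasoning` is a hypothetical helper, not part of any DeepSeek library.

```python
import re

THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw_output: str) -> tuple[str, str]:
    """Separate an R1-style reasoning trace from the final answer.

    Returns (reasoning, answer). Assumes reasoning is wrapped in <think>...</think>.
    """
    match = THINK_BLOCK.search(raw_output)
    if match is None:
        # Some serving setups put the opening <think> tag in the prompt,
        # so only the closing tag appears in the generated text.
        head, sep, tail = raw_output.partition("</think>")
        if sep:
            return head.strip(), tail.strip()
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    answer = (raw_output[:match.start()] + raw_output[match.end():]).strip()
    return reasoning, answer


reasoning, answer = split_reasoning("<think>\nRevenue grew 12%, costs 5%.\n</think>\n\nMargins improved.")
```

Keeping the trace separately can still be useful for auditing how the model reached an answer, which matters in the regulated industries mentioned above.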

Although DeepSeek is gaining ground as a cost-effective and competitive alternative to models from OpenAI, Google DeepMind, and Anthropic, some concerns exist regarding data privacy and security compliance. Organizations prioritizing these aspects may wish to consider models that explicitly adhere to robust constitutional AI principles.

DeepSeek is designed for various AI applications, including:

- Natural Language Processing (NLP),

- Advanced Reasoning & Problem-Solving,

- AI Research & Open-Source Innovation,

- Business & Industry Applications.

In short:

DeepSeek models can be free if used locally with the proper hardware. Cloud or platform access usually involves pay-per-use pricing or requires a subscription.

It is also worth noting that DeepSeek AI models have faced controversy over data privacy and security. If these are priorities for you, you may want to focus on models that explicitly follow constitutional AI principles.

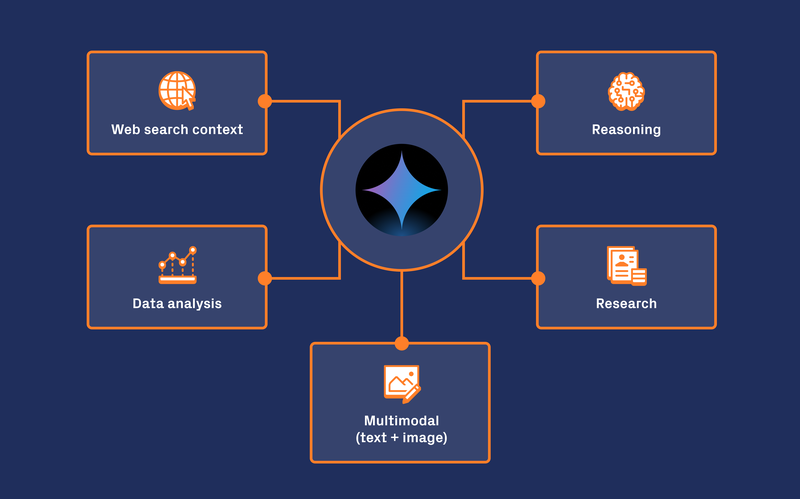

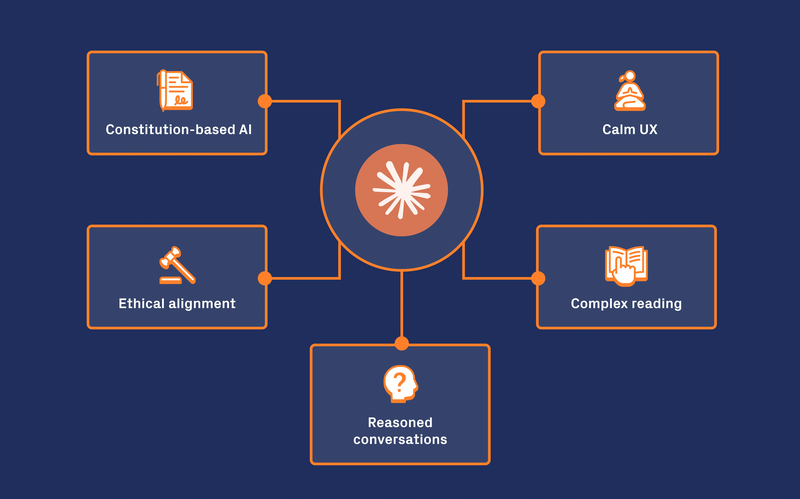

Claude 3 Models for Natural Language Processing | Anthropic

Anthropic has developed a leading family of AI models under the Claude 3 banner, with Claude 3 Opus and the newer, more advanced Claude 3.5 Sonnet at the forefront. These models excel across a wide range of tasks, from natural language understanding and generation to creative and coding workflows.

Overview of Claude 3 Models

- Claude 3 Opus, the most capable model of the original Claude 3 family, handles general-purpose NLP tasks well, including conversational AI, customer support, text summarization, and question answering, with accurate and coherent dialogue.

- Claude 3.5 Sonnet builds on Opus with significantly enhanced capabilities in reasoning, coding, and multimodal processing, making it well suited for more complex, creative, and multi-step problem-solving tasks.

Claude 3.5 Sonnet Highlights

- State-of-the-art reasoning and knowledge: at launch, Claude 3.5 Sonnet set new benchmark highs for graduate-level reasoning (GPQA), undergraduate-level knowledge (MMLU), and coding proficiency (HumanEval). It also grasps nuance, humor, and complex instructions noticeably better than earlier Claude models.

- Superior coding ability: it dramatically outperforms earlier Claude versions and competing models in software engineering evaluations, capable of writing, debugging, translating, and executing code autonomously. In one internal benchmark, Sonnet solved 64% of coding problems versus 38% for Opus.

- Computer Use Capability: Claude 3.5 Sonnet features a novel “computer use” mode enabling it to interact with software interfaces much like a human user—controlling cursors, clicking buttons, typing text, and automating multi-step workflows across GUI applications. This boosts its utility in automation and IT support scenarios.

- Multimodal Vision: Sonnet is the most advanced vision model in the Claude family, with improved visual reasoning, accurate interpretation of charts, graphs, and text transcribed from images. This supports industries such as finance, logistics, and retail where image understanding is crucial.

- Large Context Window: Claude 3.5 Sonnet can handle up to 200,000 tokens in a single interaction, enabling the processing of extremely long documents and complex conversational histories.

- Cost & Speed: operates at double the speed of Claude 3 Opus, with cost-effective pricing ($3 per million input tokens, $15 per million output tokens), making it ideal for enterprise deployment.

- Safety & Ethical Controls: Anthropic continues to lead in AI safety with “constitutional classifiers” that monitor inputs and outputs to prevent harmful content and attempts to bypass safety mechanisms (“jailbreaking”). These built-in safeguards support responsible AI usage.

Claude AI models are generally available through platforms like Anthropic’s API or partners like Slack or Notion.

Claude – Availability

Claude models are generally accessible via:

- Anthropic API: for commercial integration and development.

- Cloud partners including Amazon Bedrock and Google Cloud’s Vertex AI.

- Platforms like Slack, Notion, and dedicated apps such as the Claude.ai web and iOS applications.

- Free access with usage limits is available on Claude.ai, with higher capacity available through paid subscriptions.
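For the Anthropic API route, requests go to the Messages endpoint. The sketch below builds (but does not send) such a request with the standard library. The model ID `claude-3-5-sonnet-20241022` is one published Claude 3.5 Sonnet version, and the `ANTHROPIC_API_KEY` environment variable is an assumed convention; check Anthropic’s API reference for current model IDs and version headers.

```python
import json
import os
import urllib.request


def build_claude_request(prompt: str, model: str = "claude-3-5-sonnet-20241022",
                         max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a request to Anthropic's Messages API."""
    body = {
        "model": model,
        "max_tokens": max_tokens,  # required: caps the length of the reply
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        "https://api.anthropic.com/v1/messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


req = build_claude_request("Extract the totals from this invoice text: ...")
# To send it: with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)["content"][0]["text"]
```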

Key Use Cases of Claude

Claude 3 models, particularly 3.5 Sonnet, are used for:

- Conversational AI and customer support automation.

- Complex, multi-step reasoning and problem-solving.

- Advanced code generation, editing, and debugging tasks.

- Creative writing, story generation, brainstorming, and academic writing.

- Visual reasoning for charts, dashboards, and document analysis.

- Automating workflows via direct interaction with computer interfaces.

- Safe AI applications with strong ethical and safety constraints.

| Feature | Claude 3 Opus | Claude 3.5 Sonnet |
|---|---|---|
| Task Focus | General NLP tasks and coherent conversations | Advanced creative tasks, complex problem-solving |
| Reasoning & Knowledge Level | Strong but less advanced | Graduate-level reasoning; improved nuance and humor |
| Coding Capability | Good coding support | Superior coding, debugging, translation, and execution |
| Computer Use Capability | Not available | Yes (public beta, upgraded model); can operate computer interfaces |
| Multimodal (Vision) Ability | Standard vision tasks | Stronger vision with improved chart/graph understanding |
| Context Window | Up to 200K tokens | Up to 200K tokens |
| Speed | Baseline speed | Approximately twice as fast |
| Cost (per million input / output tokens) | $15 / $75 | $3 / $15 |
| Safety Features | Constitutional AI safeguards | Enhanced safeguards, including jailbreak resistance |

Anthropic's Claude 3.5 Sonnet represents a major leap beyond Claude 3 Opus. It offers stronger coding skills, computer use, improved vision, and faster performance at a lower price, which makes it well suited to demanding professional and enterprise AI applications. Claude 3 Opus remains a capable model for general NLP. Sonnet, however, is the better choice when advanced reasoning, creativity, and tool use are required.

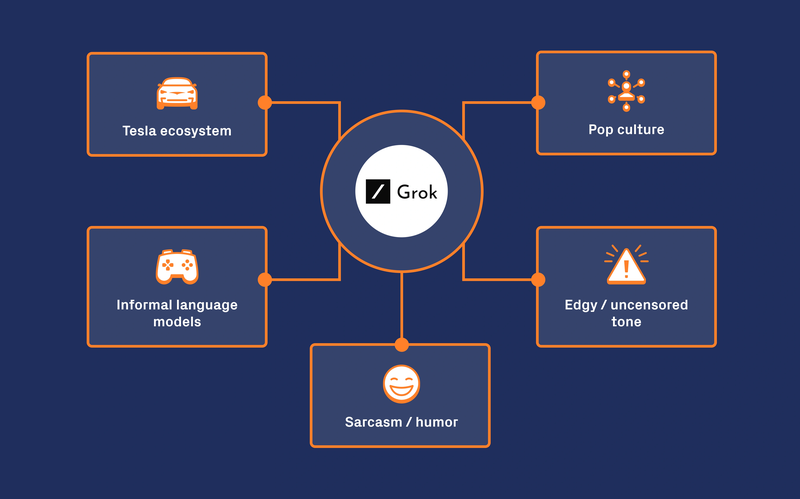

Grok is a series of large language models developed by Elon Musk’s company, xAI, with a focus on advanced conversational AI, real-time knowledge access, and deep analytical capabilities. The initial Grok 1 model debuted with a massive 314-billion-parameter Mixture-of-Experts (MoE) architecture, designed to excel in data analysis, pattern recognition, and interpretation across sectors such as finance, healthcare, and research.
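In a Mixture-of-Experts model, a router activates only a few “expert” sub-networks for each token. As a result, the compute used per token is much smaller than the total parameter count suggests. According to xAI, about 25% of Grok 1's weights are active for a given token. The toy Python sketch below illustrates generic top-k gating. The experts are plain scaling functions and the router scores are made-up numbers, so it shows the routing idea only. It is not Grok's actual implementation.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[float], float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, experts: Sequence[Expert],
                gate_scores: Sequence[float], k: int = 2) -> float:
    """Route x to the top-k experts and combine their outputs.

    Only the k selected experts are evaluated; their gate scores are
    renormalized with a softmax over the selected subset.
    """
    if len(gate_scores) != len(experts):
        raise ValueError("need one gate score per expert")
    if not 1 <= k <= len(experts):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k highest-scoring experts (ties keep their original order).
    top = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])
    # Weighted sum of the selected experts' outputs; the other experts are never called.
    return sum(w * experts[i](x) for w, i in zip(weights, top))


if __name__ == "__main__":
    # Eight toy "experts", each scaling the input by a different factor (1..8).
    experts = [lambda x, s=s: s * x for s in range(1, 9)]
    gate_scores = [0.1, 2.0, 0.3, 2.0, 0.0, -1.0, 0.5, 0.2]  # hypothetical router output
    print(moe_forward(1.0, experts, gate_scores, k=2))
```

With k=2, only two of the eight experts run for each input. This is the trade-off MoE makes: a very large total model, but a moderate per-token cost.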

Key Features and Evolution

- Integration with X (formerly Twitter): Grok’s main advantage is its deep integration with Musk’s social media platform X, which lets it pull in real-time information from the web and social feeds. This continuous feed helps it work around the knowledge cutoff that limits many other AI models.

- Advanced Conversational Abilities: Grok supports nuanced conversations with a personality characterized by wit and humor, making interactions engaging beyond purely factual exchanges.

- Grok 1.5: an improved version of the original model, released in 2024, offering:

  - enhanced reasoning capabilities,

  - a 128,000-token context window for analyzing very long documents and conversations without losing context (on par with GPT-4 Turbo, though smaller than the 200,000-token window of Claude 3 models),

  - multimodal capabilities through the Grok 1.5V preview, including image recognition and document comprehension (e.g., diagrams, PDFs).

- Standalone Apps: xAI launched dedicated Grok apps for iOS and web, allowing users to interact with Grok outside of X, expanding accessibility.

- Grok 3 and Grok 4 Series (2025):

  - Grok 3 introduced upgrades in training data volume and reasoning. According to xAI, it outperformed GPT-4o on several benchmarks. It also introduced “Think” and “Big Brain” modes for step-by-step problem-solving, although some features were not broadly available at launch.

  - Grok 4 and Grok 4 Heavy are the latest models. They focus on multimodal input (text and images), real-time web search, and multi-agent reasoning. In the Heavy variant, several agents work on a problem in parallel and compare their results.

  - Grok 4 keeps its trademark “rebellious and witty” personality and supports multilingual voice interaction.

Access and Usage of Grok

- Free Access: Grok is available for free to all users on X, with usage caps, which has enabled widespread adoption.

- Subscription Tiers: Full-featured access, including Grok 4, is offered through paid plans such as X Premium+ and xAI’s SuperGrok. Grok 4 Heavy is reserved for the top-tier SuperGrok Heavy plan. These tiers provide higher usage limits, extra features, and better platform integrations.

- Enterprise API: xAI is rolling out enterprise-grade API access for Grok, allowing businesses to integrate Grok intelligence directly into their apps and services, backed by Oracle Cloud Infrastructure and other partners.

Grok: Strengths and Applications

- Real-Time Knowledge: Grok stands out for its ability to provide current information, making it useful for financial forecasts, news monitoring, and dynamic research applications.

- Large-Scale Analytical Tasks: the expanded context and multimodal understanding enable Grok to tackle complex, multi-document analysis, coding from diagrams, and data-driven decision-making.

- Humorous and Relatable Personality: Grok’s chat style is more casual and irreverent compared to some competitors, appealing to users seeking engaging conversation.

- Competing Models: xAI positions Grok as a direct competitor to OpenAI’s GPT-4/5, Google’s Gemini, and Anthropic’s Claude, with unique strengths in real-time data and multi-agent collaborative reasoning.

Grok’s journey from the original massive MoE model to today’s multimodal, multi-agent AI framework places it at the forefront of advanced conversational and analytical AI in 2025. Its distinctive integration with X and emphasis on real-time information access provide advantages in timeliness and relevance unmatched by many rivals. Freely accessible with optional premium tiers, Grok blends powerful reasoning, extensive context length, multimodal inputs, and an engaging personality for users ranging from casual chatters to data analysts and enterprise customers.

Understanding Open-Source AI Models

Open-source AI models have transformed how teams build and scale AI projects. Leading models, including Meta’s Llama 3.x, Google’s Gemma 2, Mistral, and DeepSeek, offer powerful, customizable, and efficient alternatives to proprietary solutions. Active global communities keep improving these models, which support tasks like content creation, sentiment analysis, natural language processing, code generation, and multimodal applications (text, image, audio).

Open models offer far more transparency than closed APIs. Users can download the model weights, inspect the inference code, fine-tune the model for specific needs, and deploy it on-premises or in the cloud. This brings strong privacy and flexibility benefits that suit industries with strict compliance requirements. Training data, however, is often not released.

In contrast, proprietary models such as OpenAI’s GPT-5, Anthropic’s Claude 3.7 Sonnet, and Google’s Gemini 2.5 often deliver the highest performance out of the box and integrate tightly with cloud services. The trade-off is stricter usage controls and dependence on the vendor.

Licensing in Open-Source AI Remains a Critical Aspect

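Licenses commonly attached to open models define the legal boundaries for use, modification, and distribution. They include permissive open-source licenses such as Apache 2.0 and MIT, responsible-AI licenses such as CreativeML OpenRAIL-M (which add use-based restrictions), and custom terms such as Meta’s Llama license. Careful review ensures compliance for commercial and internal applications, which protects your business and supports ethical AI development.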

Together with vibrant community ecosystems, open-source AI continues to push innovation, fairness, and accessibility, allowing businesses to adopt cutting-edge technology with control and transparency.

This update reflects the current landscape of open-source AI models, their growing capabilities, community-driven improvements, and how they compare with proprietary models in 2025.

Free AI Tools for Business in 2025

Google Cloud continues to offer a robust suite of free AI tools designed to help businesses explore, experiment, and integrate artificial intelligence into their workflows. Core services like Translation, Speech-to-Text, Natural Language Processing, and Video Intelligence provide capabilities such as multilingual communication, audio transcription, sentiment analysis, and video content understanding, with free usage tiers that enable cost-effective adoption.

Free AI Writing Tools

Google offers two AI writing assistants: Gemini Code Assist, which is free for individual developers, and Gemini in Google Workspace. Gemini Code Assist helps with code completion, debugging, and generating code snippets, which streamlines the development process. Gemini in Workspace is included in Google Workspace business plans rather than offered free. It integrates with Docs to help users draft business proposals, web copy, and other content through a conversational interface. Its real-time suggestions can boost productivity and help keep content quality high.

NotebookLM is another free AI tool that lets users create a personalized AI research assistant. It is built on Gemini models (originally Gemini 1.5) and uses their multimodal understanding to summarize uploaded source material and surface connections between topics. With Audio Overview, users can turn their sources into podcast-style “Deep Dive” discussions. This tool is particularly useful for researchers and content creators who need to synthesize large volumes of information quickly.

Google AI Studio is a web-based tool that lets users prototype, run prompts in their browser, and experiment with the Gemini Developer API. The API offers a free tier for many Gemini models, which makes it easy to start building. This platform suits developers and businesses that want to explore generative AI without significant upfront investment.

Vertex AI is Google Cloud’s unified platform for building and deploying generative AI. It offers text, chat, and code generation, with prices quoted from $0.0001 per 1,000 characters for some models. New Google Cloud customers get $300 in free credits that can be used toward Vertex AI. The platform’s tools can help businesses automate workflows, enhance customer interactions, and extract valuable insights from data.
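The quoted figures can be sanity-checked with some quick arithmetic. The Python sketch below converts a per-1,000-character rate into a cost and shows how far a credit balance would stretch. It assumes the $0.0001 rate and the $300 credit mentioned above. Actual Vertex AI pricing varies by model, and many newer models are billed per token, so treat the result as an order-of-magnitude estimate. It uses `Decimal` to avoid floating-point rounding on small prices.

```python
from decimal import Decimal

# Assumed figures from the text above; check current Vertex AI pricing before relying on them.
PRICE_PER_1K_CHARS = Decimal("0.0001")  # USD per 1,000 characters
FREE_CREDIT_USD = Decimal("300")


def cost_for_characters(chars: int, price_per_1k: Decimal = PRICE_PER_1K_CHARS) -> Decimal:
    """Cost in USD for processing `chars` characters at a per-1,000-character rate."""
    if chars < 0:
        raise ValueError("character count must be non-negative")
    return Decimal(chars) / 1000 * price_per_1k


def characters_covered(credit: Decimal = FREE_CREDIT_USD,
                       price_per_1k: Decimal = PRICE_PER_1K_CHARS) -> int:
    """Number of characters a credit balance covers at the given rate."""
    return int(credit / price_per_1k * 1000)


if __name__ == "__main__":
    # Hypothetical job: 50,000 product descriptions averaging 800 characters each.
    print("Job cost (USD):", cost_for_characters(50_000 * 800))
    print("Characters covered by credit:", characters_covered())
```

At the assumed rate, this hypothetical 40-million-character job costs $4. The $300 credit would cover about 3 billion characters.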

Overall, these free AI tools can help businesses and individuals explore and assess the power of AI, experiment with new ideas, and discover the potential of generative AI models.

AI Ethics in 2025

AI ethics means making sure AI tools are fair, transparent, and respect people’s rights. It matters because it builds trust, protects your reputation, and helps you stay compliant. When AI is used in business, it needs to reflect your values, not just technical goals.

Why Ethical AI Matters

Ethical AI helps reduce bias in decision-making. That means fewer risks, fewer legal issues, and better outcomes for everyone. It also makes AI decisions easier to explain, which builds trust with users. Respect for privacy and autonomy isn’t optional – it’s key to long-term success.

Bias and Fairness in AI

Bias in AI often comes from the training data. To build fair models, teams use techniques like data preprocessing, regularization, and fairness metrics. These help clean the data, avoid overfitting, and check whether the model treats all groups equally. Open-source AI models especially need this kind of care, because they’re often reused at scale and across industries.
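One of the simplest fairness metrics is the demographic parity difference. It measures the gap between the highest and lowest rates of positive outcomes across groups. The Python sketch below computes it from (group, prediction) pairs, where a prediction is 1 for a positive outcome and 0 otherwise. The loan-approval data is purely illustrative. A real audit would combine several metrics, such as equalized odds, with domain review.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def positive_rates(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive predictions (1) per group, from (group, prediction) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, prediction in records:
        if prediction not in (0, 1):
            raise ValueError("predictions must be 0 or 1")
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(records: Iterable[Tuple[str, int]]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0.0 means parity)."""
    rates = positive_rates(records)
    if not rates:
        raise ValueError("no records to evaluate")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan-approval predictions: 1 = approved, 0 = declined.
    predictions = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
                   ("B", 1), ("B", 0), ("B", 0), ("B", 0)]
    print(positive_rates(predictions))
    print(demographic_parity_difference(predictions))
```

In this toy data, group A is approved 75% of the time and group B 25% of the time. The resulting gap of 0.5 is large enough to warrant investigation.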

Community forums and AI platforms like Hugging Face play a big role here. They help test, share, and improve fairness across text-to-image models, code generation tools, and large language models. These resources also guide ethical decisions around commercial use and public release.

How to Evaluate AI Models

Good AI models aren’t just accurate. They also need to be reliable, efficient, and fair. Here’s what to look for:

- Accuracy – Can the model make the right predictions?

- Efficiency – Does it work fast enough for real-world use?

- Reliability – Are the results consistent over time?

- Bias and Fairness – Does it treat all users fairly?

- Interpretability – Can you explain why it made a decision?

- Robustness – Can it handle edge cases or attacks?

- Scalability – Will it hold up under more data?

- Explainability – Can non-technical users understand it?

This applies across many types of tools: text prompts, video generation, speech recognition, code completion, sentiment analysis, and more. Whether you're working with multilingual translation, retrieval-augmented generation, or Gemini models, you need to test them against real-world use and specific needs.
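A first pass at two of these criteria, accuracy and reliability, can be automated. The Python sketch below runs a model several times over a small labelled test set. It reports overall accuracy and the share of prompts that received the same answer on every run. Here `model` is a hypothetical stand-in for whatever API or local model you are evaluating, and the toy models and test cases are placeholders.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]


def evaluate(model: Model, test_set: List[Tuple[str, str]], runs: int = 3) -> Dict[str, float]:
    """Return accuracy (across all runs) and consistency (same answer on every run)."""
    if not test_set or runs < 1:
        raise ValueError("need a non-empty test set and at least one run")
    correct = 0
    consistent = 0
    for prompt, expected in test_set:
        # Normalize answers so trivial formatting differences don't count as errors.
        answers = [model(prompt).strip().lower() for _ in range(runs)]
        correct += sum(answer == expected.strip().lower() for answer in answers)
        consistent += len(set(answers)) == 1
    return {
        "accuracy": correct / (len(test_set) * runs),
        "consistency": consistent / len(test_set),
    }


if __name__ == "__main__":
    import itertools

    # Toy stand-ins for a real model: one deterministic, one that alternates answers.
    def stable_model(prompt: str) -> str:
        return "positive" if "great" in prompt else "negative"

    answers = itertools.cycle(["positive", "negative"])

    def flaky_model(prompt: str) -> str:
        return next(answers)

    tests = [("The service was great", "positive"), ("Delivery was late", "negative")]
    print("stable:", evaluate(stable_model, tests))
    print("flaky:", evaluate(flaky_model, tests))
```

A model can be accurate on average yet inconsistent from run to run, so it is worth tracking both numbers separately. The same loop can be extended with timing (efficiency) or with per-group breakdowns (fairness).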

Continuous Improvement

AI models aren’t one-and-done; they must continually improve. Free AI tools, especially open-source models, let users explore and adapt models for specialized tasks, and external data sources can be used to fine-tune them. Free tiers support experimentation, while larger models help when it is time to scale.

Ethical evaluation is part of quality, not separate from it. Whether you use AI for image generation, content creation, or code generation, regular testing for bias, accuracy, and reliability is what keeps the output trustworthy at scale.

Key Considerations for AI Model Selection [2025 Update]

Here are a few things worth assessing before you choose an AI model for your business:

- Task Requirements: clearly define your business problem and the primary use case (e.g., automation, analytics, creative work, customer engagement).

- Total Cost: compare not just per-token rates but total ownership costs, cloud vs. local deployment, licensing, and customization.

- Input/Output Limits: check the context window size. Modern models can process very large documents, but limits still matter, especially for some open-source options.

- Features: assess support for multimodal (text, image, audio), advanced code execution, tool integration, and workflow automation.

- Privacy and Security: for sensitive data, favor models with on-premises, edge deployment, or federated learning; verify data residency, regulatory compliance, and model transparency.

- Performance and Efficiency: smaller models offer speed and low cost, while frontier models (GPT-5, Claude 3.5 Sonnet, Gemini 2.5) deliver top capabilities but may come with rate limits or higher costs.

- Task-Specific Benchmarking: some models excel at certain tasks, for example Claude for documents and coding, Gemini for vision and charts, GPT-5 for agentic workflows, and DeepSeek for cost-efficient NLP.

- Data Analysis: check compatibility with data formats and the ability to process complex datasets (statistical analysis, chart/image interpretation).

- Format Support: confirm ability to handle PDFs, images, spreadsheets, and documents as needed.

- Ethics and Governance: ensure model selection aligns with business values, fairness, and legal compliance; prefer models with robust governance and explainability.
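A weighted scoring matrix is one practical way to apply this checklist. Rate each candidate from 1 to 5 on the criteria that matter to you, weight those criteria, and compare the totals. The Python sketch below shows the arithmetic. The criteria, weights, candidate names, and ratings are all hypothetical placeholders, not benchmark results.

```python
from typing import Dict, List, Tuple

# Hypothetical weights reflecting one team's priorities (they sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "task_fit": 0.30,
    "total_cost": 0.25,
    "privacy": 0.20,
    "context_window": 0.15,
    "governance": 0.10,
}


def weighted_score(ratings: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 ratings into a single weighted score."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[criterion] * ratings[criterion] for criterion in weights)


def rank(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (name, score) pairs sorted from best to worst."""
    results = [(name, round(weighted_score(r), 2)) for name, r in candidates.items()]
    return sorted(results, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Placeholder ratings for two generic options, not real evaluations.
    candidates = {
        "hosted_frontier_model": {"task_fit": 5, "total_cost": 2, "privacy": 3,
                                  "context_window": 5, "governance": 4},
        "self_hosted_open_model": {"task_fit": 4, "total_cost": 4, "privacy": 5,
                                   "context_window": 3, "governance": 3},
    }
    for name, score in rank(candidates):
        print(f"{name}: {score}")
```

With these made-up weights, the self-hosted option scores 3.95 and the hosted one 3.75, because cost and privacy were weighted heavily. Changing the weights to match your own priorities can reverse the outcome, and that sensitivity is the point of the exercise.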

So, choose wisely!


Free AI Models for Business | 2025 Summary

In conclusion, 2025 has ushered in a new era of AI innovation, with models that are more powerful, efficient, and accessible than ever before. If you're about to choose an AI model for your business, start with the key considerations listed above. With this article in hand, you should be able to move forward confidently.

Let’s talk about which AI model will work for your business – no jargon, just clear answers.

![[August'25 Update] Top Free AI Models for Business | Automation and LLM's in 2025 banner](/media/images/Banner_Desktop_aut5.format-png.width-1400.webpquality-90.png)
